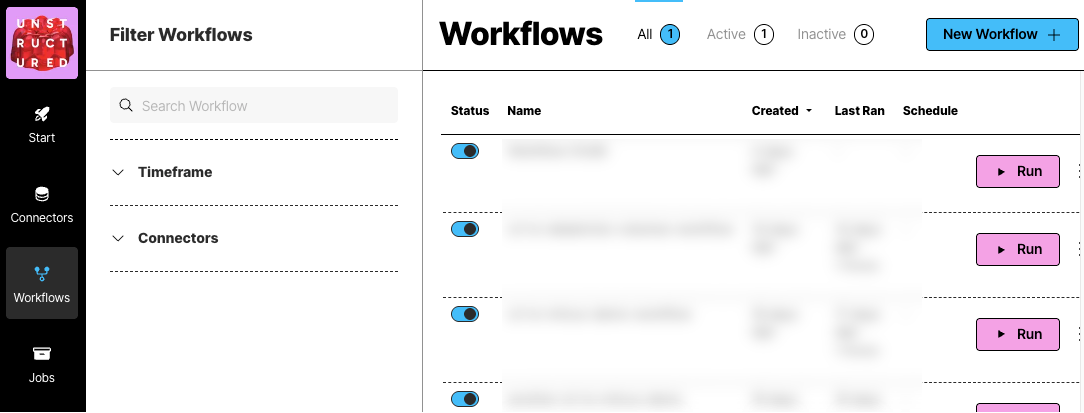

Workflows dashboard

To view the workflows dashboard, on the sidebar, click Workflows.

A workflow in Unstructured is a defined sequence of processes that automate the data handling from source to destination. It allows users to configure how and when data should be ingested, processed, and stored.

Workflows are crucial for establishing a systematic approach to managing data flows within the platform, ensuring consistency, efficiency, and adherence to specific data processing requirements.


Create a workflow

Unstructured provides two types of workflow builders:

- Automatic or Build it for Me workflows, which use sensible default workflow settings to enable you to get good-quality results faster.

- Custom or Build it Myself workflows, which enable you to fine-tune the workflow settings behind the scenes to get very specific results.

Create an automatic workflow

You must first have an existing source connector and destination connector to add to the workflow.

You cannot create an automatic workflow that uses a local source connector.

If you do not have an existing remote connector for either your target source (input) or destination (output) location, create the source connector, create the destination connector, and then return here.

To see your existing connectors, on the sidebar, click Connectors, and then click Sources or Destinations.

- On the sidebar, click Workflows.

- Click New Workflow.

- Next to Build it for Me, click Create Workflow.

  If a radio button appears instead of Build it for Me, select it, and then click Continue.

- For Workflow Name, enter some unique name for this workflow.

- In the Sources dropdown list, select your source location.

- In the Destinations dropdown list, select your destination location.

  You can select multiple source and destination locations. Files will be ingested from all of the selected source locations, and the processed data will be delivered to all of the selected destination locations.

- Click Continue.

- The Reprocess All box applies only to blob storage connectors such as the Amazon S3, Azure Blob Storage, and Google Cloud Storage connectors:

  - Checking this box reprocesses all documents in the source location on every workflow run.

  - Unchecking this box causes future runs to process only documents that were added to, or updated in, the source location since the last workflow run. Previously processed documents are not processed again. However:

    - Even if this box is unchecked, a renamed file is always treated as a new file, regardless of whether the file’s original contents have changed.

    - Even if this box is unchecked, a file that is removed but is added back later with the same file name is processed on future runs only if the file’s contents have changed since the file was originally processed.

- Click Continue.

- If you want this workflow to run on a schedule, in the Repeat Run dropdown list, select one of the scheduling options, and fill in the scheduling settings. Otherwise, select Don’t repeat.

- Click Complete.
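The Reprocess All rules described in the steps above can be sketched in a few lines of Python. This is only an illustration, not Unstructured's implementation: the `fingerprint` and `files_to_process` helpers are hypothetical, and the sketch assumes that changes are detected by comparing a content hash recorded per file name, which the documentation does not state.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Hash file contents (assumption: a stand-in for real change detection)."""
    return hashlib.sha256(content).hexdigest()


def files_to_process(current, processed, reprocess_all):
    """Return the file names that the next run would process.

    current:   {file name: fingerprint} for files now in the source location.
    processed: {file name: fingerprint} recorded when each file was last
               processed, kept even if the file was later removed.
    """
    if reprocess_all:
        # Box checked: every document is reprocessed on every run.
        return sorted(current)
    # Box unchecked: only unknown names (new or renamed files) and names
    # whose contents differ from what was processed before.
    return sorted(
        name for name, digest in current.items()
        if processed.get(name) != digest
    )
```

In this model, a renamed file appears under a name that was never recorded, so it is processed again, while a file that was removed and later restored with identical contents matches its recorded fingerprint and is skipped.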

An automatic workflow is created with the following default settings:

- Partitioner: Auto strategy

  Unstructured automatically analyzes and processes files on a page-by-page basis (for PDF files) and on a document-by-document basis for everything else:

  - If the page or document has no images and likely does not have tables, Fast partitioning is used, and the page or document is billed at the Fast rate for processing.

  - If the page or document has only a few tables or images with standard layouts and languages, High Res partitioning is used, and the page or document is billed at the High Res rate for processing.

  - If the page or document has more than a few tables or images, VLM partitioning is used, and the page or document is billed at the VLM rate for processing.

- Chunker: Chunk by Title strategy

  - Contextual Chunking: No (unchecked)

  - Combine Text Under N Characters: 3000

  - Include Original Elements: Yes (checked)

  - Max Characters: 5500

  - Multipage Sections: Yes (checked)

  - New After N Characters: 3500

  - Overlap: 350

  - Overlap All: Yes (checked)

- Embedder:

  - Provider: Azure OpenAI

  - Model: text-embedding-3-large, with 3072 dimensions

- Enrichments: This workflow contains no enrichments. Learn about available enrichments.

Image summary descriptions, table summary descriptions, and table-to-HTML output are generated only when the Partitioner node in a workflow is set to use the High Res partitioning strategy and the workflow also contains an image description, table description, or table-to-HTML enrichment node.

Setting the Partitioner node to use Auto, VLM, or Fast in a workflow that also contains an image description, table description, or table-to-HTML enrichment node will not generate any image summary descriptions, table summary descriptions, or table-to-HTML output, and it could also cause the workflow to stop running or produce unexpected results.
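The way the Auto partitioning strategy routes each page or document, as described in the default settings above, can be pictured with the following sketch. It is a simplification under stated assumptions: `PageStats`, `pick_strategy`, `billed_pages`, and the `FEW` cutoff are hypothetical, the documentation does not say how many tables or images count as "a few", and the sketch ignores layout and language.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PageStats:
    """Counts detected on one PDF page or one non-PDF document."""
    image_count: int
    table_count: int


# Hypothetical cutoff: the documentation only says "a few".
FEW = 3


def pick_strategy(stats: PageStats, few: int = FEW) -> str:
    """Choose a partitioning strategy (and billing rate) for one page."""
    visual_items = stats.image_count + stats.table_count
    if visual_items == 0:
        return "Fast"
    if visual_items <= few:
        return "High Res"
    return "VLM"


def billed_pages(pages) -> Counter:
    """Count how many pages would be billed at each rate."""
    return Counter(pick_strategy(page) for page in pages)
```

Because the decision is made per page for PDF files, a single document can be billed at a mix of Fast, High Res, and VLM rates.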

- On the sidebar, click Workflows.

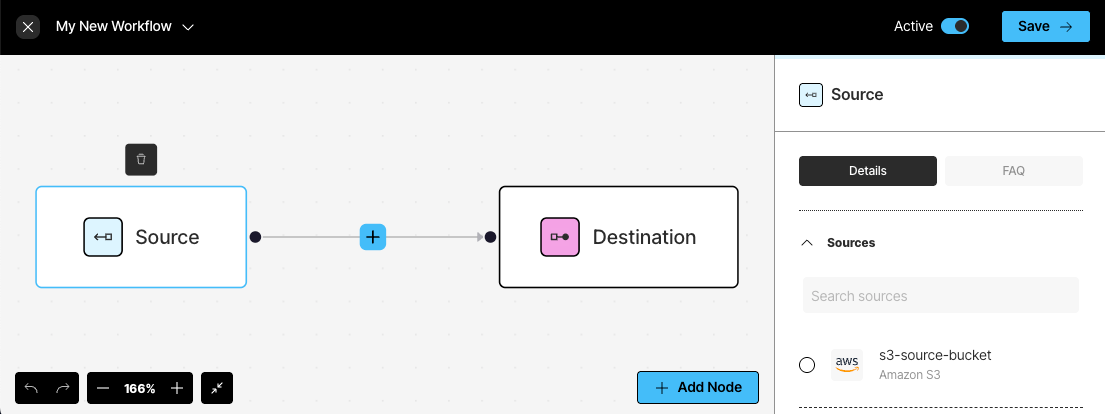

- In the list of available workflows, click the workflow that was just created. This opens a visual designer that shows your workflow as a directed acyclic graph (DAG). This DAG contains a node representing each step in the workflow. There is one node for the partitioning step, another node for the chunking step, and so on.

- To learn how to change a node’s settings or to add enrichment nodes, click the FAQ button in the flyout pane in the workflow DAG designer.
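To make the DAG idea concrete, the sketch below models a workflow as a mapping from each node to the nodes that must run before it, and then derives a valid run order with Python's standard `graphlib` module. The node names and the linear Source, Partitioner, Chunker, Embedder, Destination layout are assumptions for illustration; they are not read from the designer.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a node; its value is the set of nodes that must finish first.
# Assumed layout for illustration only.
workflow = {
    "Partitioner": {"Source"},
    "Chunker": {"Partitioner"},
    "Embedder": {"Chunker"},
    "Destination": {"Embedder"},
}


def run_order(graph):
    """Return the nodes in an order that respects every dependency.

    Raises graphlib.CycleError if the graph contains a cycle,
    which is why a workflow graph must be acyclic.
    """
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(" -> ".join(run_order(workflow)))
```

Adding an enrichment node to this model would mean adding one more key and pointing it at the node whose output it consumes.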

Create a custom workflow

If you already have an existing workflow that you want to change, do the following:

- On the sidebar, click Workflows.

- Click the name of the workflow that you want to change.

- Skip ahead to Step 11 in the following procedure.

You must first have an existing source connector and destination connector to add to the workflow.

You can create a custom workflow that uses a local source connector, but you cannot save the workflow.

If you do not have an existing connector for either your target source (input) or destination (output) location, create the source connector, create the destination connector, and then return here.

To see your existing connectors, on the sidebar, click Connectors, and then click Sources or Destinations.

- On the sidebar, click Workflows.

- Click New Workflow.

- Click the Build it Myself option, and then click Continue.

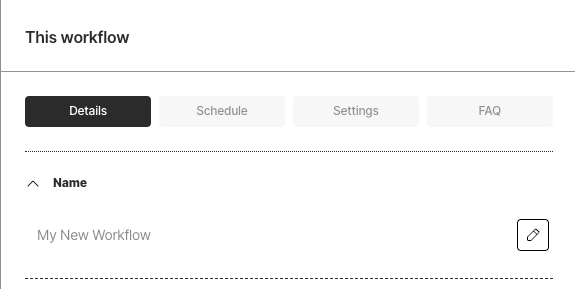

- In the This workflow pane, click the Details button.

- Next to Name, click the pencil icon, enter some unique name for this workflow, and then click the check mark icon.

- If you want this workflow to run on a schedule, click the Schedule button. In the Repeat Run dropdown list, select one of the scheduling options, and fill in the scheduling settings.

- To overwrite any previously processed files, or to retry any documents that fail to process, click the Settings button, and check either or both of the boxes.

  The Reprocess All Files box applies only to blob storage connectors such as the Amazon S3, Azure Blob Storage, and Google Cloud Storage connectors:

  - Checking this box reprocesses all documents in the source location on every workflow run.

  - Unchecking this box causes future runs to process only documents that were added to, or updated in, the source location since the last workflow run. Previously processed documents are not processed again. However:

    - Even if this box is unchecked, a renamed file is always treated as a new file, regardless of whether the file’s original contents have changed.

    - Even if this box is unchecked, a file that is removed but is added back later with the same file name is processed on future runs only if the file’s contents have changed since the file was originally processed.

- The workflow begins with the following layout: The following workflow layouts are also valid:

- In the pipeline designer, click the Source node. In the Source pane, select the source location. Then click Save.

  To use a local source location, do not choose a source connector. If the workflow uses a local source location, in the Source node, drag or click to specify a local file, and then click Test. The workflow’s results are displayed on-screen. A workflow that uses a local source location has the following limitations:

  - You cannot save the workflow.

  - You cannot send the results to a remote destination location, even if you have attached a destination connector to the workflow. However, you can save the results to a local JSON-formatted file.

- Click the Destination node. In the Destination pane, select the destination location. Then click Save.

-

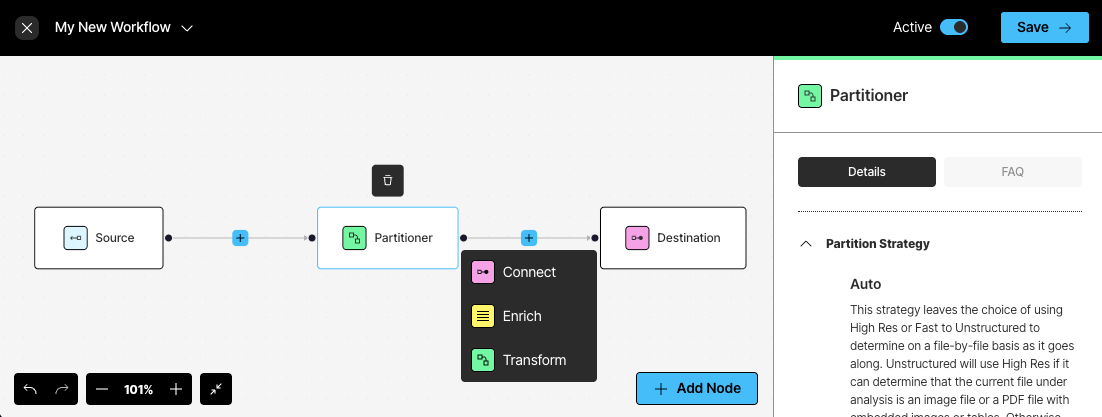

As needed, add more nodes by clicking the plus icon (recommended) or Add Node button:

- Click Connect to add another Source or Destination node. You can add multiple source and destination locations. Files will be ingested from all of the source locations, and the processed data will be delivered to all of the destination locations. Learn more.

-

Click Enrich to add a Chunker or Enrichment node. Learn more.

Image summary descriptions, table summary descriptions, and table-to-HTML output is generated only when the Partitioner node in a workflow is set to use the High Res partitioning strategy and the workflow also contains an image description, table description, or table-to-HTML enrichment node.Setting the Partitioner node to use Auto, VLM, or Fast in a workflow that also contains an image description, table description, or table-to-HTML enrichment node will not generate any image summary descriptions, table summary descriptions, or table-to-HTML output, and it could also cause the workflow to stop running or produce unexpected results.

- Click Transform to add a Partitioner or Embedder node. Learn more.

To edit a node, click that node, and then change its settings. To delete a node, click that node, and then click the trash can icon above it.Make sure to add nodes in the correct order. If you are unsure, see the usage hints in the blue note that appears in the node’s settings pane.

- Click Save.

- If you did not set the workflow to run on a schedule, you can run the workflow now.

Custom workflow node types

Partitioner node


Choose from one of four available partitioning strategies.

Unstructured recommends that you choose the Auto partitioning strategy in most cases. With Auto, Unstructured does all the heavy lifting, optimizing at runtime for the highest quality at the lowest cost page-by-page.

You should consider the following additional strategies only if you are absolutely sure that your documents are of the same type. Each of the following strategies is best suited for specific situations. Choosing one of these strategies other than Auto for sets of documents of different types could produce undesirable results, including reduction in transformation quality. Learn more.

- VLM: For the highest-quality transformation of these file types: .bmp, .gif, .heic, .jpeg, .jpg, .pdf, .png, .tiff, and .webp.

- High Res: For all other supported file types, and for the generation of bounding box coordinates.

- Fast: For text-only documents.

For the VLM strategy, choose one of the following providers and models:

- Anthropic:

  - Anthropic Claude 3.5 Sonnet

- OpenAI:

  - OpenAI GPT-4o

- Amazon Bedrock:

  - Anthropic Claude 3.5 Sonnet

  - Anthropic Claude 3 Opus

  - Anthropic Claude 3 Haiku

  - Anthropic Claude 3 Sonnet

  - Amazon Nova Pro

  - Amazon Nova Lite

  - Meta Llama 3.2 90B Instruct

  - Meta Llama 3.2 11B Instruct

- Vertex AI:

  - Gemini 2.0 Flash

When you use the VLM strategy with embeddings for PDF files of 200 or more pages, you might notice some errors when these files are processed. These errors typically occur when these larger PDF files have lots of tables and high-resolution images.

Chunker node


For Chunkers, select one of the following:

- Chunk by title: Preserve section boundaries and optionally page boundaries as well. A single chunk will never contain text that occurred in two different sections. When a new section starts, the existing chunk is closed and a new one is started, even if the next element would fit in the prior chunk. Also, specify the following:

  - Contextual chunking: When switched on, prepends chunk-specific explanatory context to each chunk. Learn more.

  - Combine text under n chars: Combine elements until a section reaches a length of this many characters. The default is 0.

  - Include original elements: Check this box to output the elements that were used to form a chunk, to appear in the metadata field’s orig_elements field for that chunk. By default, this box is unchecked.

  - Max characters: Cut off new sections after reaching a length of this many characters. This is a strict limit. The default is 2048.

  - Multipage sections: Check this box to allow sections to span multiple pages. By default, this box is unchecked.

  - New after n chars: Cut off new sections after reaching a length of this many characters. This is an approximate limit. The default is 1500.

  - Overlap: Apply a prefix of this many trailing characters from the prior text-split chunk to second and later chunks formed from oversized elements by text-splitting. The default is 160.

  - Overlap all: Check this box to apply overlap to “normal” chunks formed by combining whole elements. Use with caution as this can introduce noise into otherwise clean semantic units. By default, this box is unchecked.

- Chunk by character (also known as basic chunking): Combine sequential elements to maximally fill each chunk. Also, specify the following:

  - Contextual chunking: When switched on, prepends chunk-specific explanatory context to each chunk. Learn more.

  - Include original elements: Check this box to output the elements that were used to form a chunk, to appear in the metadata field’s orig_elements field for that chunk. By default, this box is unchecked.

  - Max characters: Cut off new sections after reaching a length of this many characters. The default is 2048.

  - New after n chars: Cut off new sections after reaching a length of this many characters. This is an approximate limit. The default is 1500.

  - Overlap: Apply a prefix of this many trailing characters from the prior text-split chunk to second and later chunks formed from oversized elements by text-splitting. The default is 160.

  - Overlap all: Check this box to apply overlap to “normal” chunks formed by combining whole elements. Use with caution as this can introduce noise into otherwise clean semantic units. By default, this box is unchecked.

- Chunk by page: Preserve page boundaries. When a new page is detected, the existing chunk is closed and a new one is started, even if the next element would fit in the prior chunk. Also, specify the following:

  - Contextual chunking: When switched on, prepends chunk-specific explanatory context to each chunk. Learn more.

  - Include original elements: Check this box to output the elements that were used to form a chunk, to appear in the metadata field’s orig_elements field for that chunk. By default, this box is unchecked.

  - Max characters: Cut off new sections after reaching a length of this many characters. This is a strict limit. The default is 500.

  - New after n chars: Cut off new sections after reaching a length of this many characters. This is an approximate limit. The default is 50.

  - Overlap: Apply a prefix of this many trailing characters from the prior text-split chunk to second and later chunks formed from oversized elements by text-splitting. The default is 30.

  - Overlap all: Check this box to apply overlap to “normal” chunks formed by combining whole elements. Use with caution as this can introduce noise into otherwise clean semantic units. By default, this box is unchecked.

- Chunk by similarity: Use the sentence-transformers/multi-qa-mpnet-base-dot-v1 embedding model to identify topically similar sequential elements and combine them into chunks. Also, specify the following:

  - Contextual chunking: When switched on, prepends chunk-specific explanatory context to each chunk. Learn more.

  - Include original elements: Check this box to output the elements that were used to form a chunk, to appear in the metadata field’s orig_elements field for that chunk. By default, this box is unchecked.

  - Max characters: Cut off new sections after reaching a length of this many characters. This is a strict limit. The default is 500.

  - Similarity threshold: Specify a threshold between 0 and 1 exclusive (0.01 to 0.99 inclusive), where 0 indicates completely dissimilar vectors and 1 indicates identical vectors, taking into consideration the trade-offs between precision (a higher threshold) and recall (a lower threshold). The default is 0.5. Learn more.
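To see how the Max characters and Overlap settings interact when a single oversized element must be text-split, consider the following sketch. It is a simplified model, not the chunker's actual code: the `split_oversized` helper is hypothetical, and it cuts at fixed character positions, whereas a real chunker may prefer to break at word or sentence boundaries.

```python
def split_oversized(text: str, max_characters: int, overlap: int) -> list[str]:
    """Split text into chunks of at most max_characters characters.

    Every chunk after the first begins with the last `overlap`
    characters of the chunk before it.
    """
    if max_characters <= 0:
        raise ValueError("max_characters must be positive")
    if not 0 <= overlap < max_characters:
        raise ValueError("overlap must be at least 0 and below max_characters")

    chunks = []
    start = 0
    step = max_characters - overlap  # fresh characters added per chunk
    while start < len(text):
        chunks.append(text[start:start + max_characters])
        if start + max_characters >= len(text):
            break  # this chunk already reached the end of the text
        start += step
    return chunks


if __name__ == "__main__":
    for chunk in split_oversized("abcdefghij", max_characters=4, overlap=1):
        print(chunk)
```

A larger overlap repeats more context between neighboring chunks, at the cost of producing more chunks for the same text.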

Enrichment node


Choose one of the following:

Image summary descriptions, table summary descriptions, and table-to-HTML output are generated only when the Partitioner node in a workflow is set to use the High Res partitioning strategy and the workflow also contains an image description, table description, or table-to-HTML enrichment node.

Setting the Partitioner node to use Auto, VLM, or Fast in a workflow that also contains an image description, table description, or table-to-HTML enrichment node will not generate any image summary descriptions, table summary descriptions, or table-to-HTML output, and it could also cause the workflow to stop running or produce unexpected results.

- Image to summarize images. Also select one of the following provider (and model) combinations to use:

  - OpenAI (GPT-4o). Learn more.

  - Anthropic (Claude 3.5 Sonnet). Learn more.

  - Amazon Bedrock (Claude 3.5 Sonnet). Learn more.

- Table to summarize tables. Also select one of the following provider (and model) combinations to use:

  - OpenAI (GPT-4o). Learn more.

  - Anthropic (Claude 3.5 Sonnet). Learn more.

  - Amazon Bedrock (Claude 3.5 Sonnet). Learn more.

- Table to convert tables to HTML. Also select one of the following provider (and model) combinations to use:

  - OpenAI (GPT-4o). Learn more.

- Text to generate a list of recognized entities and their relationships by using a technique called named entity recognition (NER). Also select one of the following provider (and model) combinations to use:

  - OpenAI (GPT-4o). Learn more.

  - Anthropic (Claude 3.5 Sonnet). Learn more.
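The dependency between the Partitioner strategy and the image and table enrichments can be expressed as a small pre-flight check. The sketch below is hypothetical: `VISUAL_ENRICHMENTS`, `enrichment_warnings`, and the enrichment labels are illustrative names, not identifiers from the Unstructured UI or API.

```python
# Enrichments that, per the note in this section, only produce output
# when the Partitioner node uses the High Res strategy.
VISUAL_ENRICHMENTS = {"image description", "table description", "table to HTML"}


def enrichment_warnings(partitioner_strategy: str, enrichments: list[str]) -> list[str]:
    """Return one warning per enrichment that would produce no output."""
    if partitioner_strategy == "High Res":
        return []
    return [
        f"'{name}' produces no output with the {partitioner_strategy} strategy; "
        "set the Partitioner node to High Res."
        for name in enrichments
        if name in VISUAL_ENRICHMENTS
    ]


if __name__ == "__main__":
    nodes = ["image description", "named entity recognition"]
    for warning in enrichment_warnings("Auto", nodes):
        print(warning)
```

The named entity recognition enrichment is deliberately left out of the restricted set, because the note above mentions only image description, table description, and table-to-HTML nodes.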

Embedder node


For Select Embedding Model, select one of the following:

- Azure OpenAI: Use Azure OpenAI to generate embeddings with one of the following models:

  - text-embedding-3-small, with 1536 dimensions.

  - text-embedding-3-large, with 3072 dimensions.

  - Ada 002 (Text) (text-embedding-ada-002), with 1536 dimensions.

- Amazon Bedrock: Use Amazon Bedrock to generate embeddings with one of the following models:

  - Titan Text Embeddings V2, with 1024 dimensions. Learn more.

  - Titan Embeddings G1 - Text, with 1536 dimensions. Learn more.

  - Titan Multimodal Embeddings G1, with 1024 dimensions. Learn more.

  - Cohere Embed English, with 1024 dimensions. Learn more.

  - Cohere Embed Multilingual, with 1024 dimensions. Learn more.

- TogetherAI: Use TogetherAI to generate embeddings with one of the following models:

  - M2-BERT-80M-2K-Retrieval, with 768 dimensions.

  - M2-BERT-80M-8K-Retrieval, with 768 dimensions.

  - M2-BERT-80M-32K-Retrieval, with 768 dimensions.

- Voyage AI: Use Voyage AI to generate embeddings with one of the following models:

  - voyage-code-2, with 1536 dimensions.

  - voyage-3, with 1024 dimensions.

  - voyage-3-large, with 1024 dimensions.

  - voyage-3-lite, with 512 dimensions.

  - voyage-code-3, with 1024 dimensions.

  - voyage-finance-2, with 1024 dimensions.

  - voyage-law-2, with 1024 dimensions.

  - voyage-multimodal-3, with 1024 dimensions.
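Vector stores generally require an index whose dimension count matches the embedding model, so it can help to keep the numbers listed above in a lookup table. The following sketch does that; the `EMBEDDING_DIMENSIONS` table and both helper functions are hypothetical conveniences, and the keys are the display names from this page rather than API identifiers.

```python
# (provider, model display name) -> embedding dimensions, as listed above.
EMBEDDING_DIMENSIONS = {
    ("Azure OpenAI", "text-embedding-3-small"): 1536,
    ("Azure OpenAI", "text-embedding-3-large"): 3072,
    ("Azure OpenAI", "text-embedding-ada-002"): 1536,
    ("Amazon Bedrock", "Titan Text Embeddings V2"): 1024,
    ("Amazon Bedrock", "Titan Embeddings G1 - Text"): 1536,
    ("Amazon Bedrock", "Titan Multimodal Embeddings G1"): 1024,
    ("Amazon Bedrock", "Cohere Embed English"): 1024,
    ("Amazon Bedrock", "Cohere Embed Multilingual"): 1024,
    ("TogetherAI", "M2-BERT-80M-2K-Retrieval"): 768,
    ("TogetherAI", "M2-BERT-80M-8K-Retrieval"): 768,
    ("TogetherAI", "M2-BERT-80M-32K-Retrieval"): 768,
    ("Voyage AI", "voyage-code-2"): 1536,
    ("Voyage AI", "voyage-3"): 1024,
    ("Voyage AI", "voyage-3-large"): 1024,
    ("Voyage AI", "voyage-3-lite"): 512,
    ("Voyage AI", "voyage-code-3"): 1024,
    ("Voyage AI", "voyage-finance-2"): 1024,
    ("Voyage AI", "voyage-law-2"): 1024,
    ("Voyage AI", "voyage-multimodal-3"): 1024,
}


def expected_dimensions(provider: str, model: str) -> int:
    """Look up the dimension count for a provider and model pair."""
    return EMBEDDING_DIMENSIONS[(provider, model)]


def index_matches(provider: str, model: str, index_dimensions: int) -> bool:
    """Check that a destination index was created with the right size."""
    return expected_dimensions(provider, model) == index_dimensions
```

For example, a destination index created with 1536 dimensions would not match the default automatic workflow's text-embedding-3-large model, which produces 3072 dimensions.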

Edit, delete, or run a workflow

To run a workflow once, manually:

- On the sidebar, click Workflows.

- In the list of workflows, click Run in the row for the workflow that you want to run.

- Edit via Form: Changes the existing configuration of your workflow.

- Delete: Removes the workflow from the platform. Use this action cautiously, as it will permanently delete the workflow and its configurations.

- Open: Opens the workflow’s settings page.

Pause a scheduled workflow

To stop running a workflow that is set to run on a repeating schedule:

- On the sidebar, click Workflows.

- In the list of workflows, turn off the Status toggle in the row for the workflow that you want to stop running on a repeated schedule.

Duplicate a workflow

To duplicate (copy or clone) a workflow:

- On the sidebar, click Workflows.

- In the list of workflows, click the ellipses (the three dots) in the row for the workflow that you want to duplicate.

- Click Duplicate. A duplicate of the workflow is created with the same configuration as the original workflow. The duplicate workflow has the same display name as the original workflow but with (Copy) at the end.